Shipping Information

Express Computer Systems can ship to virtually any address in the world and provides you with the best shipping rates possible. The cost of shipping varies depending on the service you require, your destination, and the size of the shipment. Shipping costs will be calculated separately from your order and billed to you after purchase. In the notes section of your order, please provide us with your preferred shipping method and we will get back to you.

Custom-configured orders ship within 2 business days after all required products are in stock. Please allow 3 business days for products that are not in stock to arrive at our facility in Irvine, California.

Domestic Shipments

- Standard Ground
  - Shipping time: 2-7 business days
  - Order processing time: 0-3 business days
  - Delivery time after order is placed: 2-10 business days
  - Excludes weekends, holidays or weather delays.

- 3 Business Days
  - Shipping time: 3 business days
  - Order processing time: 0-3 business days
  - Delivery time after order is placed: 3-6 business days
  - Excludes weekends, holidays or weather delays.

- 2 Business Days
  - Shipping time: 2 business days
  - Order processing time: 0-3 business days
  - Delivery time after order is placed: 2-5 business days
  - Excludes weekends, holidays or weather delays.

- Overnight / Next Business Day
  - Shipping time: 1 business day
  - Order processing time: 0-3 business days
  - Delivery time after order is placed: 1-4 business days
  - Excludes weekends, holidays or weather delays.

International Shipments

- International Economy
  - Shipping time: 4-6 business days
  - Order processing time: 0-3 business days
  - Delivery time after order is placed: 5-9 business days
  - Excludes weekends, holidays or weather delays.

- International Priority
  - Shipping time: 1-3 business days
  - Order processing time: 0-3 business days
  - Delivery time after order is placed: 2-6 business days
  - Excludes weekends, holidays or weather delays.

For more information on shipping, view our Shipping Information page or send us a message.

ECS Warranty Information

Express Computer Systems (ECS) provides a 1-year parts warranty against defects in material or workmanship that affect the product's functionality. All refurbished items are tested prior to shipping to guarantee up-time upon arrival. If you are having issues with an item we provided, please Contact Us and we will do our best to assist you.

What does our ECS warranty cover?

We offer a 1-year warranty as standard, and additional years can be added to fit your needs. Please reach out to a sales associate via the Contact Us page or live chat for more information on extended coverage options. If your product fails during the coverage period, we will offer a Next Business Day replacement. If we are unable to replace the product, we will refund its original selling price. Shipping costs and tax are not refundable. Express Computer Systems retains the right to decide whether the goods will be replaced or refunded.

What is not covered by our warranty?

Our warranty does not cover any problem caused by the following conditions:

- accidents; misuse; carelessness; electrostatic discharge; shock; temperature or humidity beyond the specifications of the product; improper installation; operation; modification;

- any improper use that contravenes the instructions in the user manual;

- problems caused by other equipment.

Our warranty is void if the product is returned with damaged, removed, or counterfeit labels, or with any modifications (including any component or external cover removal). Our warranties do not cover data loss or damage to any other equipment. In addition, our warranties do not cover incidental damages, consequential damages, or costs related to data recovery, removal, and installation.

Important notes:

- Please ask for any additional warranty or coverage you may require beyond the standard 1-year ECS warranty prior to final purchase. Requests for additional warranty made after your purchase may be denied.

- FREE technical support is provided on purchased items via phone, email, or live chat.

About Express Computer Systems

Express Computer Systems is committed to transforming our customers’ IT infrastructure with high-quality products at affordable costs. Our sales reps and customer support engineers understand that our customers rely heavily on advanced technology to run their business but need to do so without breaking the bank. We specialize in building custom server configurations, custom storage solutions, networking solutions, and components from top manufacturers including HPE, Dell, Cisco, NetApp, Dell EMC, HPE Nimble and many others.

Same-Day Shipping

At Express Computer Systems, we know hardware emergencies can happen at any time. We also understand that you want to receive the best hardware as quickly as possible. We ship the majority of our orders out the same day payment is made. Curious whether the item you need will make it out on time? Feel free to contact us directly via the Contact Us link at the bottom, the Live Chat feature, or by phone at (800) 327-0730.

Buy, Sell, Rent, Lease, Trade-In

At Express Computer Systems, we not only sell you equipment at a great value, we also purchase your used Dell, Cisco, NetApp or other networking equipment. Feel free to contact us with the model of the items you're looking to sell and we'll get you a quote ASAP. We also have flexible financing options for your purchasing needs.

In-House Configuration and Testing Lab

Every item we sell is tested on-site in our state-of-the-art hardware engineering lab. Our certified technicians test every piece of equipment and update all necessary firmware. The highest quality of testing and certification is guaranteed. We warranty every item we sell. Ask us today about the warranty for a specific item.

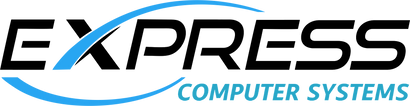

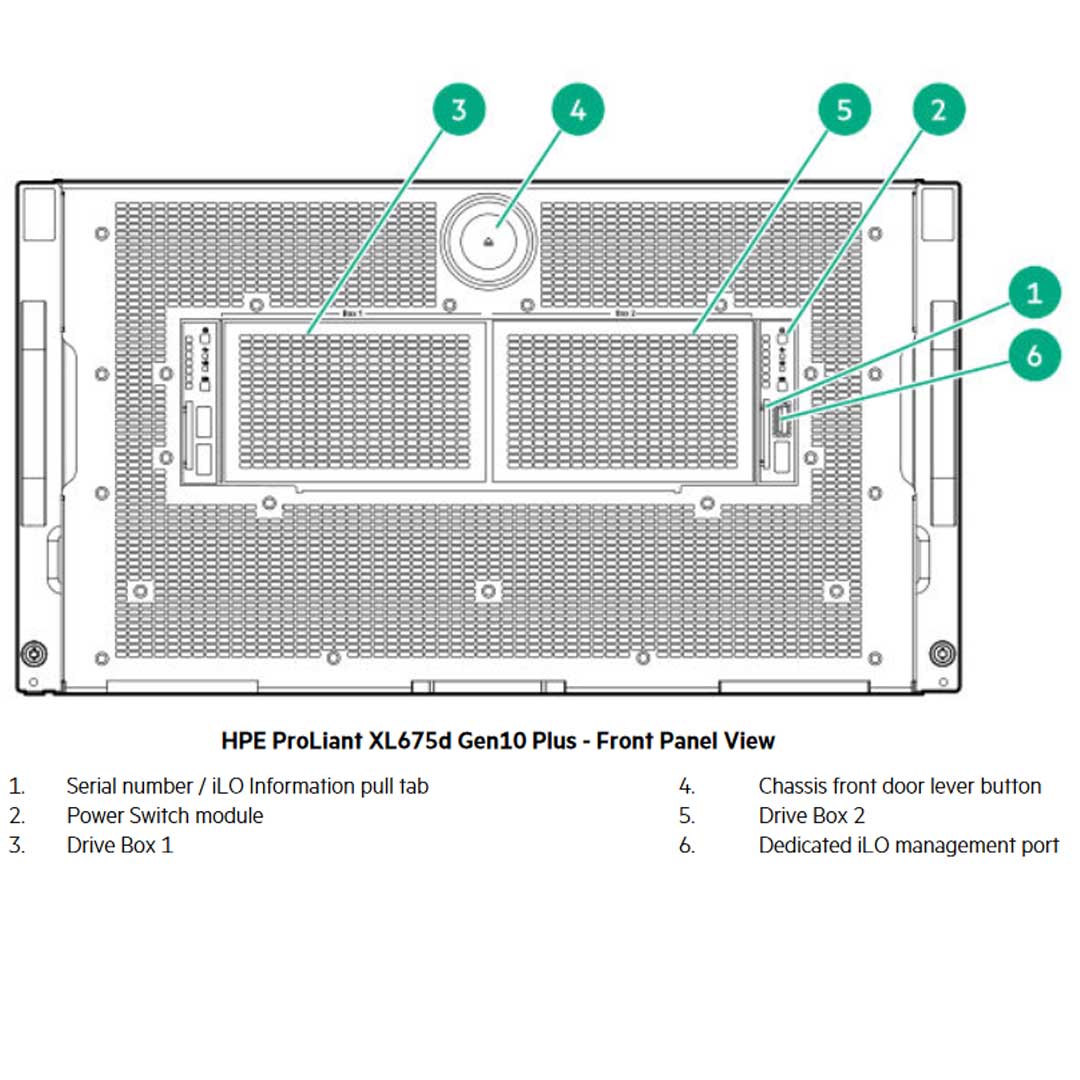

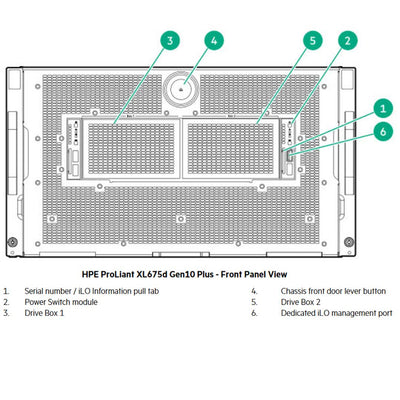

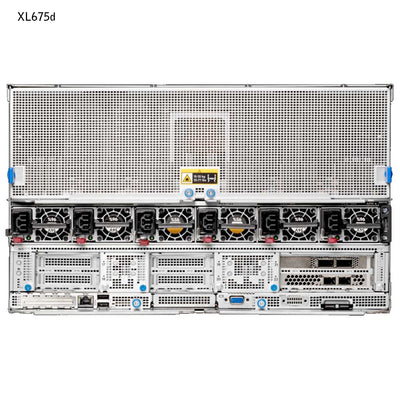

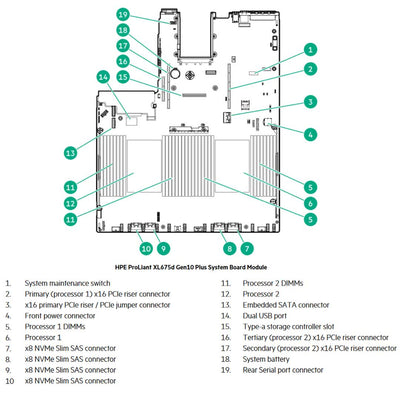

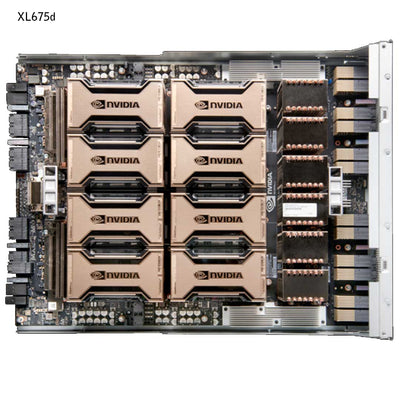

HPE XL675d Full Width Node P19725-B21

HPE XL675d Full Width Node P19725-B21

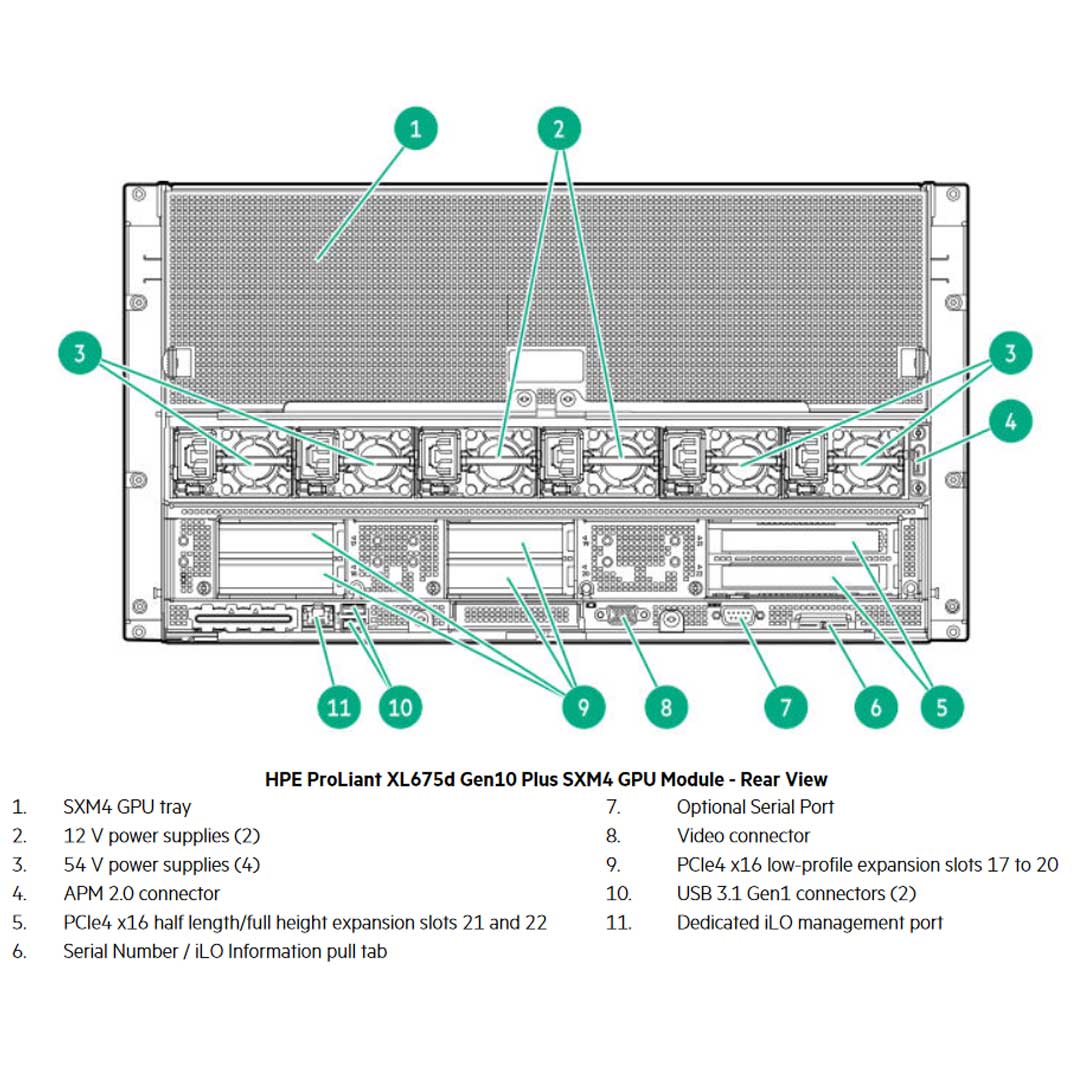

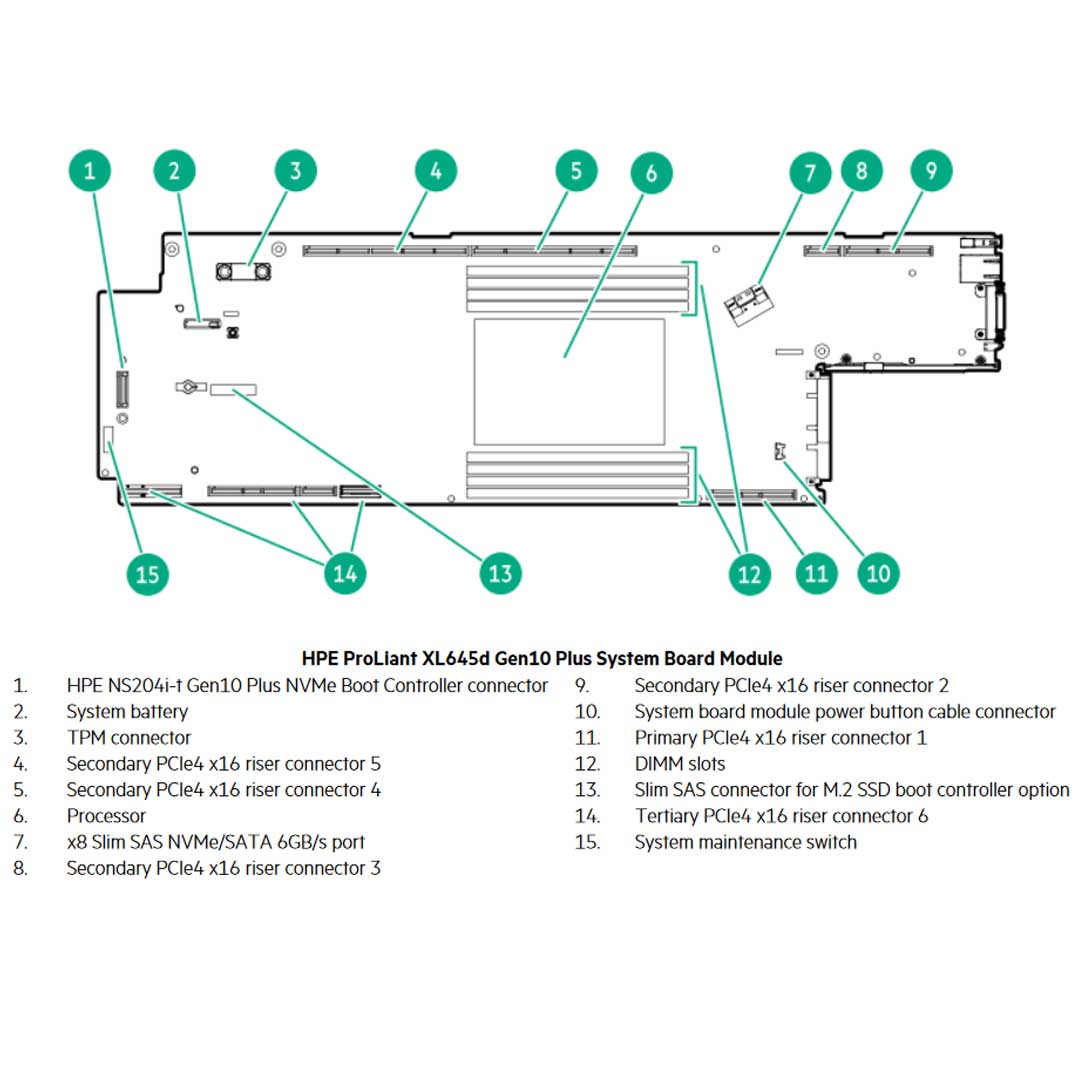

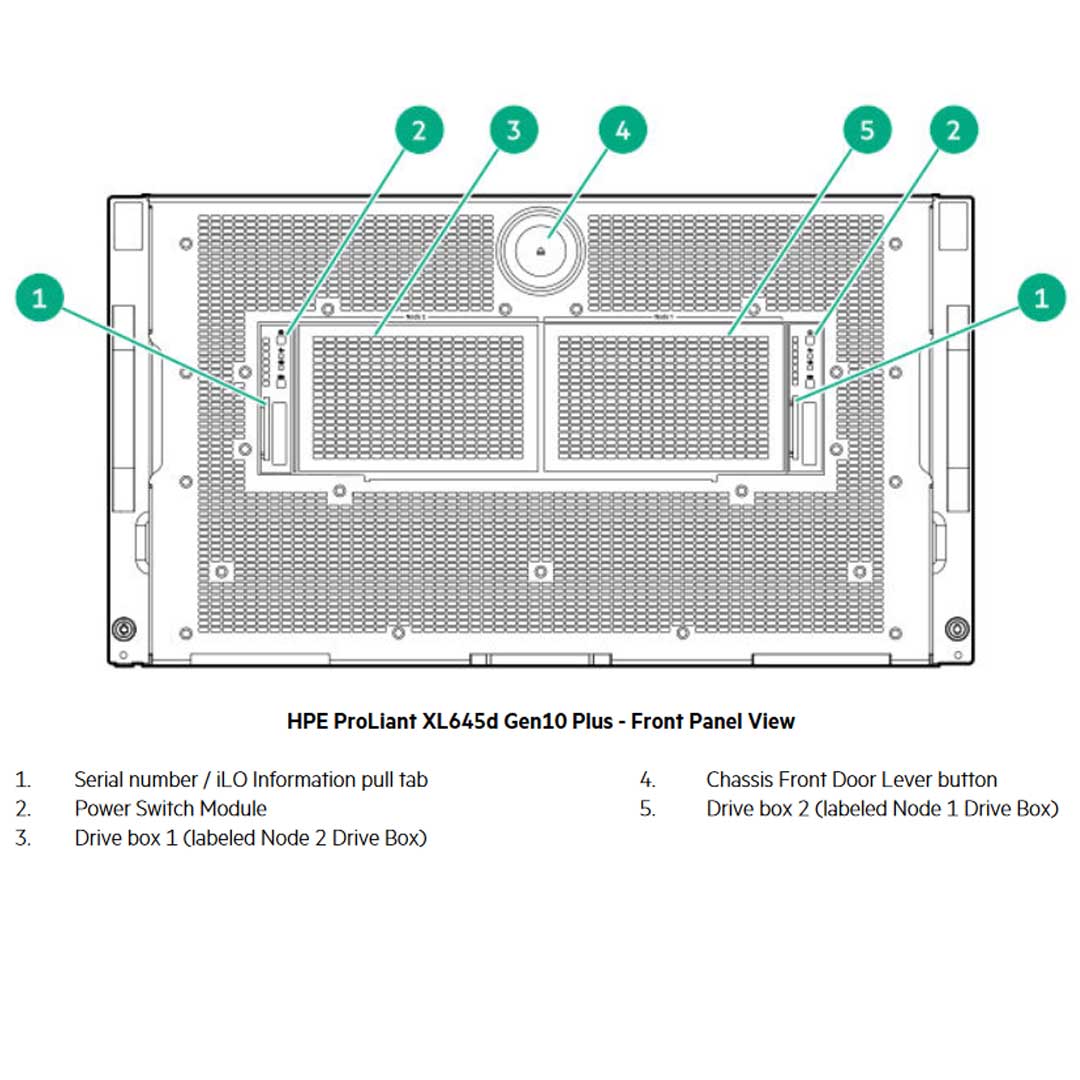

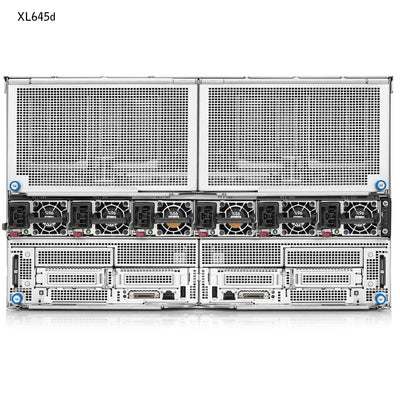

HPE XL645d Half Width Node P19726-B21

HPE XL645d Half Width Node P19726-B21 HPE ProLiant XL645d GPU Options

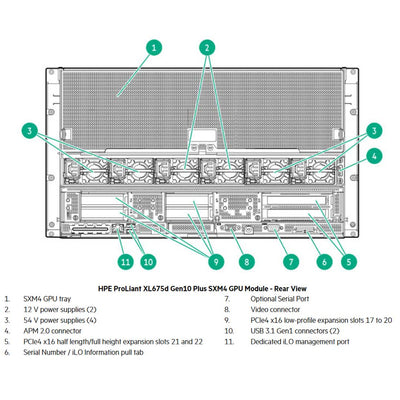

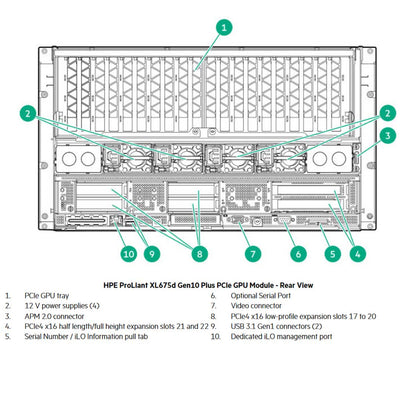

HPE ProLiant XL645d GPU Options HPE ProLiant XL675d GPU Options

HPE ProLiant XL675d GPU Options