The NEW Dell PowerEdge R760 is a 2U, two-socket rack server. Gain the performance you need with this full-featured enterprise server, designed to optimize even the most demanding workloads like Artificial Intelligence and Machine Learning.

The Dell PowerEdge R760 offers powerful performance in a purpose-built, cyber-resilient, mainstream server.

Air-cooled at peak performance

- New Smart Flow chassis optimizes airflow to support the highest core count CPUs in an air-cooled environment within the current IT infrastructure.

- Support for up to 16 x 2.5” drives and 2 x 350 watt processors.

Max Performance

- Add up to two 4th Gen Intel® Xeon® Scalable processors with up to 56 cores per processor for faster and more accurate processing performance.

- Accelerate in-memory workloads with up to 32 DDR5 RDIMMs at up to 4400 MT/s with two DIMMs per channel (2DPC), or up to 16 DDR5 RDIMMs at up to 4800 MT/s with one DIMM per channel (1DPC).

- Support for GPUs including 2 x double-wide or 6 x single-wide for workloads requiring acceleration.

Gain agility

- Achieve maximum efficiency with multiple chassis designs that are tailored to your desired workloads and business objectives.

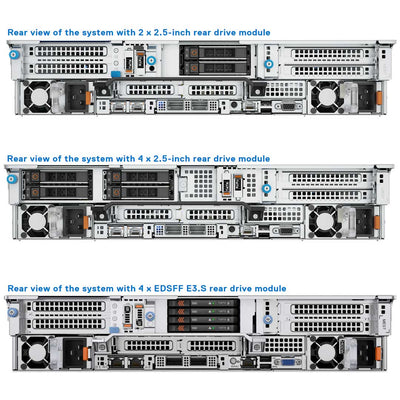

- Storage options include up to 12 x 3.5” SAS3/SATA, or up to 24 x 2.5” SAS4/SATA, plus up to 24 x NVMe U.2 Gen4 or 16 x NVMe E3.S Gen5*.

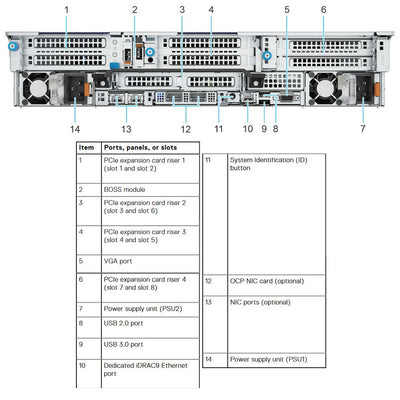

- Multiple Gen4 and Gen5 riser configurations (up to 8 x PCIe slots) with interchangeable components that seamlessly integrate to address customer needs over time.

Dell PowerEdge R760 Drive Options

16 SFF Configuration Options

There is no 16-bay backplane. Each of the configurations below is built from multiple 8 SFF backplanes.

- 16 x 2.5" (8 x NVMe RAID + 8 x NVMe RAID) Dual PERC H965i

- 16 x 2.5" (8 x NVMe Direct + 8 x NVMe Direct)

- 16 x 2.5" (8 x SAS4/SATA + 8 x SAS4/SATA)

- 16 x 2.5" (8 x NVMe RAID + 8 x NVMe RAID)

- 16 x 2.5" (8 x SAS4/SATA + 8 x SAS4/SATA) + 8 x 2.5" NVMe Direct

Looking for more drive options?

There are so many options that we split them up to make the range of R760 configurations easier to understand.

12x 3.5" SAS/SATA

8x 2.5" SAS/SATA/NVMe

8x 2.5" NVMe

8x 2.5" EDSFF E3.S

24x 2.5" SAS/SATA/NVMe

24x 2.5" NVMe Passive

24x 2.5" NVMe Switched

Processor, Heatsink & Fans

Processors & Heatsink

Up to 2 x 4th Gen Intel® Xeon® Scalable or Intel® Xeon® Max processors with up to 56 cores

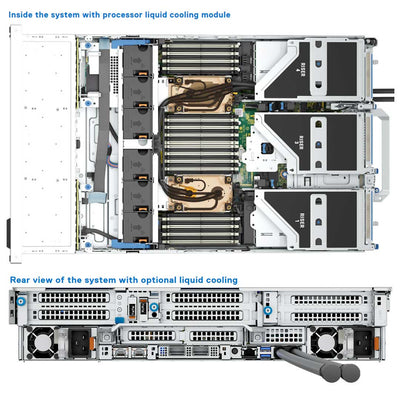

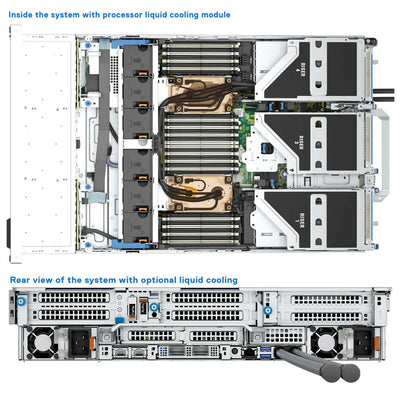

There are three types of heat sinks for the Dell R760 rack server. The heat sink option depends on the configuration and the TDP of the CPU. Any TDP above 250 W will be liquid-cooled.

- STD HSK: ≤ 165 W (supports only 2.5-inch drives and non-GPU configuration)

- 2U HPR HSK: 165 W–350 W (supports 2.5-inch drives and non-GPU configuration)

- L-type HSK: supports all GPU/FPGA configurations

Liquid Cooling

Any processor with a TDP above 250 W needs the liquid cooling system installed.

CPUs 9480, 9470, 8470Q and 6458Q are supported only in a liquid cooling configuration.

There are two cooling systems for this Dell Gen 16 server: air cooling and direct liquid cooling (DLC). If DLC is installed on the CPUs, the rear VGA port is removed. There is an option to replace the VGA port at the front or the rear, but the rear option takes up a PCIe slot.

Fans

All GPU/FPGA cards require a 1U L-type HSK and GPU shroud.

GPU configurations support only High-performance Gold (HPR Gold) fans.

Each system comes with six hot-swap fans. Like the heat sink, the fan type depends on the configuration and the TDP of the CPU. The system supports the fan types below.

Up to six hot-swap fans

- Standard (STD) fans

- High-performance Silver (HPR Silver) fans

- High-performance Gold (HPR Gold) fans

- CPUs with a TDP of 250 W+ require DLC

Rear Drive Fans

If you plan to install rear drives in the Dell R760 rack server, you will need to install High-performance Gold (HPR Gold) fans unless the CPU TDP is 270 W or greater.

Each rear drive cage needs an extra fan and an airflow baffle. Both are installed when you order the rear drives.

Rear drives are supported on the 12 LFF and the 24 SFF chassis.

Supported Memory

Accelerate in-memory workloads with up to 32 DDR5 RDIMMs at up to 4400 MT/s with two DIMMs per channel (2DPC), or up to 16 DDR5 RDIMMs at up to 4800 MT/s with one DIMM per channel (1DPC).

| DIMM Type | DIMM Rank | DIMM Capacity | Single Processor: Minimum system capacity | Single Processor: Maximum system capacity | Dual Processor: Minimum system capacity | Dual Processor: Maximum system capacity |
|---|---|---|---|---|---|---|
| DDR5 RDIMM | Single Rank | 16 GB | 16 GB | 256 GB | 32 GB | 512 GB |
| DDR5 RDIMM | Dual Rank | 32 GB | 32 GB | 512 GB | 64 GB | 1 TB |
| DDR5 RDIMM | Dual Rank | 64 GB | 64 GB | 1 TB | 128 GB | 2 TB |
| DDR5 RDIMM | Quad Rank | 128 GB | 128 GB | 2 TB | 256 GB | 4 TB |
| DDR5 RDIMM | Octa Rank | 256 GB | 256 GB | 4 TB | 512 GB | 8 TB |
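The capacities in the memory table follow from simple arithmetic: the minimum is one DIMM per processor, and the maximum is every slot filled with the same DIMM size. The sketch below reproduces that arithmetic. It assumes 16 DIMM slots per processor, which is inferred from the 32-RDIMM maximum for two sockets rather than stated directly, and the function and constant names are illustrative only.

```python
# Assumption: 16 DIMM slots per processor, inferred from the 32-RDIMM
# maximum quoted for a two-socket system. All names are illustrative.
SLOTS_PER_PROCESSOR = 16


def system_capacity_gb(dimm_gb: int, processors: int) -> tuple[int, int]:
    """Return (minimum, maximum) system memory in GB for one DIMM size.

    Minimum: one DIMM per installed processor.
    Maximum: every slot populated with the same DIMM size.
    """
    if processors not in (1, 2):
        raise ValueError("processors must be 1 or 2 on a two-socket server")
    minimum = dimm_gb * processors
    maximum = dimm_gb * SLOTS_PER_PROCESSOR * processors
    return minimum, maximum


def format_gb(gb: int) -> str:
    """Render whole multiples of 1024 GB as TB, everything else as GB."""
    if gb >= 1024 and gb % 1024 == 0:
        return f"{gb // 1024} TB"
    return f"{gb} GB"


# Print one row per DIMM size: single min/max, then dual min/max.
for size in (16, 32, 64, 128, 256):
    row = system_capacity_gb(size, 1) + system_capacity_gb(size, 2)
    print(f"{size} GB DIMM:", ", ".join(format_gb(v) for v in row))
```

For example, `system_capacity_gb(256, 2)` gives `(512, 8192)`, which matches the 512 GB minimum and 8 TB maximum in the last row of the table.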

Storage Controllers Options

Storage Controllers

Dell RAID controller options offer performance improvements, including the fPERC solution. fPERC provides a base RAID HW controller without consuming a PCIe slot by using a small form factor and a high-density connector to the base planar.

This system supports PERC11 and PERC12 controllers. You can even install dual controllers: one fPERC and one APERC card. Refer to the cabling section of the manual to understand the supported configurations.

| Category | Supported storage controller cards |
|---|---|
| Internal controllers | PERC H965i, PERC H755, PERC H755N, PERC H355 |
| External controllers | HBA355e, PERC H965e |
| Internal Boot | Boot Optimized Storage Subsystem (BOSS-N1): HWRAID 2 x M.2 NVMe SSD; USB |
| Software RAID | S160 |
| SAS Host Bus Adapters (HBA) | HBA355i |

NOTE: PowerEdge does not support Tri-Mode, the mixing of SAS, SATA, and NVMe behind the same controller.

NOTE: The Universal Backplane supports HW RAID for SAS/SATA with direct attach NVMe, and does not support HW RAID for NVMe.

BOSS-N1 Hot-Swap Boot Options

Like most Dell systems, this server has a boot option with 2 x M.2 NVMe drives in the optional BOSS-N1 card. The position of the BOSS-N1 changes depending on which risers or rear drives are installed.

Installing the BOSS-N1 card carrier does not require the system to be powered off. System shutdown is only required when installing the BOSS-N1 controller card module.

| Components in kit | R760 (quantity) |
|---|---|
| BOSS-N1 controller card module | 1 |
| BOSS-N1 card carrier | 1 or 2* |
| M.2 NVMe SSD | 1 or 2* |
| M.2 NVMe SSD capacity label | 1 or 2† |
| BOSS-N1 card carrier blank | 1 |
| M3 x 0.5 x 4.5 mm screws | 1 |
| BOSS-N1 power cable for Riser 1 (220 mm) | 1 |
| BOSS-N1 signal cable for Riser 1 (170 mm) | 1 |
| BOSS-N1 power cable for x4 rear drive module (260 mm) | 1 |
| BOSS-N1 signal cable for x4 rear drive module (240 mm) | 1 |

Networking Adapters Options

The Dell Gen16 R760 rack server has two options for NIC support: the LOM card or the MIC card. Only one of the two can be installed. The OCP 3.0 slot can be populated regardless of whether the LOM or the MIC is selected.

LAN on Motherboard (LOM)

On the Dell R760, the LOM is optional and provides 2 x 1 GbE ports using a BCM5720 LAN controller.

Management Interface card (MIC)

If you install a Dell Data Processing Unit (DPU) card, you will need to install the MIC. If you install the MIC, you cannot install a LOM card, because the MIC uses the LOM ports.

Open Compute Project (OCP) 3.0

- 1 GbE x 4

- 10 GbE x 2

- 10 GbE x 4

- 25 GbE x 2

- 25 GbE x 4

One thing to note about the OCP 3.0 slot: an x16 card will be downgraded to x8 because of the OCP limitations on this system board. Another major difference between the LOM and the OCP is the number of ports each can handle. The LOM supports only two ports, while the OCP 3.0 slot can support a four-port card.
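The networking rules above (LOM and MIC are mutually exclusive, a DPU needs the MIC, the OCP slot is independent, and x16 OCP cards run at x8) can be written down as a small checker. This is only a sketch of the rules as stated in this section. The function names, the messages, and the restriction to two- or four-port OCP cards (taken from the card list above) are assumptions made for illustration, not a Dell tool or API.

```python
# Maximum link width of the OCP 3.0 slot on this system board, per the text.
OCP_SLOT_MAX_LANES = 8


def ocp_link_width(card_lanes: int) -> int:
    """Return the lane count an OCP card would actually run at."""
    return min(card_lanes, OCP_SLOT_MAX_LANES)


def validate_nic_selection(lom: bool, mic: bool, dpu: bool,
                           ocp_ports: int = 0) -> list[str]:
    """Return a list of rule violations; an empty list means no rule is broken.

    lom / mic  : whether the LOM or MIC card is selected
    dpu        : whether a DPU card is installed
    ocp_ports  : port count of the OCP 3.0 card, or 0 for no OCP card
    """
    problems = []
    if lom and mic:
        problems.append("LOM and MIC cannot both be installed")
    if dpu and not mic:
        problems.append("a DPU card requires the MIC")
    # Assumption: only the 2-port and 4-port OCP cards listed above exist.
    if ocp_ports not in (0, 2, 4):
        problems.append("OCP 3.0 cards listed here have 2 or 4 ports")
    return problems


print(validate_nic_selection(lom=True, mic=False, dpu=False, ocp_ports=4))
print(validate_nic_selection(lom=True, mic=True, dpu=True))
print(ocp_link_width(16))
```

The first call reports no problems, because a LOM plus a four-port OCP card is allowed. The second reports the LOM/MIC conflict.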

Expansion Slots

Up to 8 x PCIe Gen4 or up to 4 x PCIe Gen5 slots. This system has 4 riser slots and 15 riser cards with 14 different PCIe riser configurations. Take your time when reviewing the risers and configurations, and contact us for support if you need more help. The table below shows the riser configurations and which riser cards go into each configuration.

| Config No. | Riser configuration | No. of Processors | PERC type supported | Rear storage possible |
|---|---|---|---|---|
| 0 | NO RSR | 2 | Front PERC | No |
| 1 | R1B+R2A+R3B+R4B | 2 | Front PERC/PERC Adapter | No |
| 2 | R1Q+R2A+R3B+R4Q | 2 | Front PERC/PERC Adapter | No |
| 3-1 | R1P+R2A+R3B+R4P (HL) | 2 | Front PERC/PERC Adapter | No |
| 3-2 | R1P+R2A+R3B+R4P (FL) | 2 | Front PERC/PERC Adapter | No |
| 4-1 | R1P+R2A+R3B+R4R (HL) | 2 | Front PERC/PERC Adapter | No |
| 5-1 | R1R+R2A+R3A+R4P (HL) | 2 | Front PERC/PERC Adapter | No |
| 5-2 | R1R+R2A+R3A+R4P (FL) | 2 | Front PERC/PERC Adapter | No |
| 6 | R2A+R4Q | 2 | Front PERC/PERC Adapter | Yes |
| 7 | R1Q+R2A+R4Q | 2 | Front PERC/PERC Adapter | Yes |
| 8 | R1B+R2A | 1 | PERC Adapter | No |
| 9 | R1Q+R2A+R4R | 1 | Front PERC | No |
| 10-1 | R1P+R2A+R4R (HL) | 1 | Front PERC | No |
| 10-2 | R1P+R2A+R4R (FL) | 1 | Front PERC | No |
| 11 | R1 Paddle + R2A + R3B + R4 Paddle | 2 | N/A | No |
| 12 | R1Q+R2A+R4Q | 2 | Front PERC/PERC Adapter | Yes |
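With this many combinations, it can help to treat the riser table as data and filter it. The sketch below transcribes the rows of the table above and looks up configurations by processor count and rear-storage support. It treats the "No. of Processors" column as the exact processor count for a configuration, which is an assumption, and the type and function names are illustrative only.

```python
from typing import NamedTuple, Optional


class RiserConfig(NamedTuple):
    number: str         # "Config No." column
    risers: str         # "Riser configuration" column
    processors: int     # "No. of Processors" column
    perc: str           # "PERC type supported" column
    rear_storage: bool  # "Rear storage possible" column


BOTH = "Front PERC/PERC Adapter"

# Rows transcribed from the riser configuration table.
RISER_CONFIGS = [
    RiserConfig("0", "NO RSR", 2, "Front PERC", False),
    RiserConfig("1", "R1B+R2A+R3B+R4B", 2, BOTH, False),
    RiserConfig("2", "R1Q+R2A+R3B+R4Q", 2, BOTH, False),
    RiserConfig("3-1", "R1P+R2A+R3B+R4P (HL)", 2, BOTH, False),
    RiserConfig("3-2", "R1P+R2A+R3B+R4P (FL)", 2, BOTH, False),
    RiserConfig("4-1", "R1P+R2A+R3B+R4R (HL)", 2, BOTH, False),
    RiserConfig("5-1", "R1R+R2A+R3A+R4P (HL)", 2, BOTH, False),
    RiserConfig("5-2", "R1R+R2A+R3A+R4P (FL)", 2, BOTH, False),
    RiserConfig("6", "R2A+R4Q", 2, BOTH, True),
    RiserConfig("7", "R1Q+R2A+R4Q", 2, BOTH, True),
    RiserConfig("8", "R1B+R2A", 1, "PERC Adapter", False),
    RiserConfig("9", "R1Q+R2A+R4R", 1, "Front PERC", False),
    RiserConfig("10-1", "R1P+R2A+R4R (HL)", 1, "Front PERC", False),
    RiserConfig("10-2", "R1P+R2A+R4R (FL)", 1, "Front PERC", False),
    RiserConfig("11", "R1 Paddle + R2A + R3B + R4 Paddle", 2, "N/A", False),
    RiserConfig("12", "R1Q+R2A+R4Q", 2, BOTH, True),
]


def find_configs(processors: int,
                 rear_storage: Optional[bool] = None) -> list[str]:
    """Return config numbers matching the processor count and, if given,
    the rear-storage requirement."""
    return [
        cfg.number
        for cfg in RISER_CONFIGS
        if cfg.processors == processors
        and (rear_storage is None or cfg.rear_storage == rear_storage)
    ]


print(find_configs(processors=1))
print(find_configs(processors=2, rear_storage=True))
```

Under these assumptions, the single-processor lookup returns configurations 8, 9, 10-1, and 10-2, and the dual-processor lookup with rear storage returns 6, 7, and 12.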

Riser Cards

Riser 1B 2x16 (R1B)

Riser 1R 2x16 (R1R)

Riser 1R-FL 2x16 (R1R-FL)

Riser 1P 1x16 (R1P)

Riser 1P-FL 1x16 (R1P-FL)

Riser 1Q 2x16 (R1Q)

Riser 2A 2x16 (R2A)

Riser 3A 1x16 (R3A)

Riser 3A-FL 1x16 (R3A-FL)

Riser 3B 2x8 (R3B)

Riser 4P 1x16 (R4P)

Riser 4P-FL 1x16 (R4P-FL)

Riser 4B 2x16 (R4B)

Riser 4Q 2x16 (R4Q)

Riser 4R 2x16 (R4R SNAPI)

Paddle Card Kit

GPU Options

Up to two double-wide 350 W or six single-wide 75 W accelerators. If GPUs are installed, the default air shroud must be replaced with the GPU air shroud. The GPU air shroud also has a “filler” that must be installed if a single-width GPU card or an empty riser is used.

All GPU/FPGA cards require a 1U L-type HSK and GPU shroud.

- Double-width (DW) GPUs are supported only on riser configurations (RC) 3-2, 5-2, or 10-2

- RC 5-2: Supports DW GPU only on slot 7

- RC 10-2: Only supports a single processor configuration.

- RC 10-2: Supports DW GPU only on slot 2.
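The double-width GPU rules in the list above can be checked mechanically before ordering. The following is a minimal sketch that encodes only those four bullets. The names are hypothetical, and because the list gives no slot restriction for RC 3-2, the sketch accepts any slot there.

```python
# Riser configurations that accept a double-width (DW) GPU, per the list above.
DW_GPU_RISER_CONFIGS = {"3-2", "5-2", "10-2"}

# Slots that may hold a DW GPU, where the list states a restriction.
# RC 3-2 has no stated restriction, so it is deliberately absent here.
DW_SLOT_LIMITS = {"5-2": {7}, "10-2": {2}}

# Riser configurations limited to a single processor.
SINGLE_PROCESSOR_ONLY = {"10-2"}


def check_dw_gpu(riser_config: str, processors: int, slot: int) -> list[str]:
    """Return the rules a proposed DW GPU placement breaks (empty if none)."""
    if riser_config not in DW_GPU_RISER_CONFIGS:
        return [f"RC {riser_config} does not support DW GPUs"]

    problems = []
    if riser_config in SINGLE_PROCESSOR_ONLY and processors != 1:
        problems.append(f"RC {riser_config} supports only one processor")

    allowed_slots = DW_SLOT_LIMITS.get(riser_config)
    if allowed_slots is not None and slot not in allowed_slots:
        allowed = ", ".join(str(s) for s in sorted(allowed_slots))
        problems.append(
            f"RC {riser_config} supports DW GPUs only on slot {allowed}"
        )
    return problems


print(check_dw_gpu("5-2", processors=2, slot=7))
print(check_dw_gpu("10-2", processors=2, slot=7))
print(check_dw_gpu("1", processors=2, slot=7))
```

An empty list means none of the listed rules is violated. It does not confirm power, cabling, or thermal requirements, which this sketch does not cover.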

| Components | GPU FL Kit details | Quantity |
|---|---|---|
| Risers | Riser configuration (RC) 3-2, 5-2*, or 10-2* | RC 3-2: R1P^ (FL) + R2A (HL) + R3B (HL) + R4P^ (FL); RC 5-2: R1R (FL) + R2A (HL) + R3A (FL) + R4P^ (FL); RC 10-2: R1P^ (FL) + R2A (HL) + R4R (FL) |
| Shroud | GPU shroud | 1 |
| Fans | HPR GOLD fan | 6 |
| Heat sinks | L-type heat sink for processor 1 and processor 2 | RC 3-2, 5-2: 2; RC 10-2: 1 |
| Cables | Power cable | 2 x 4 (8-position) or 2 x 6 + 1 x 4 (12-position + 4-sideband) |

FL - Full Length, HL - Half Length, HPR - High Performance, RC - Riser configuration

12 x 3.5-inch and rear drive configuration systems do not support a GPU card.

Power, Dimensions & Weight:

Power

Two redundant hot-swap AC or DC power supply units.

- 2800 W Titanium 200–240 VAC or 240 HVDC

- 2400 W Platinum 100–240 VAC or 240 HVDC

- 1800 W Titanium 200–240 VAC or 240 HVDC

- 1400 W Platinum 100–240 VAC or 240 HVDC

- 1100 W Titanium 100–240 VAC or 240 HVDC

- 1100 W LVDC -48 to -60 VDC

- 800 W Platinum 100–240 VAC or 240 HVDC

- 700 W Titanium 200–240 VAC or 240 HVDC

Dimensions & Weight

Width with ears: 482.0 mm (18.97")

Width without ears: 434.0 mm (17.08")

Height: 86.8 mm (3.41")

Length: 736.29 mm (28.98"), ear to PSU handle

Weight with fully populated drives: 36.1 kg (79.58 lbs)

Weight without drives and PSU installed: 25.1 kg (55.33 lbs)
