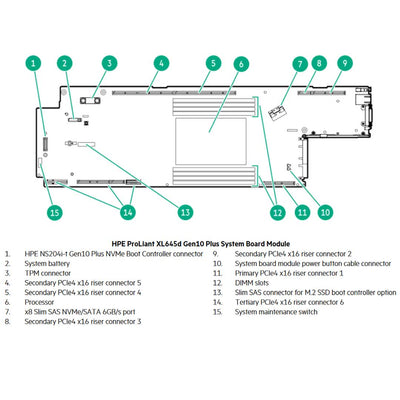

HPE ProLiant XL645d Gen10 Plus Node Server Chassis | P19726-B21

Built for the exascale era, the HPE Apollo 6500 Gen10 Plus system accelerates performance with NVIDIA HGX A100 Tensor Core GPUs with NVLink or AMD Instinct™ MI200 with 2nd Gen Infinity Fabric™ Link to take on the most complex HPC and AI workloads. This purpose-built platform provides enhanced performance with premier GPUs, fast GPU interconnects, high-bandwidth fabric, and configurable GPU topology, along with rock-solid reliability, availability, and serviceability (RAS). Configure it with single- or dual-processor options for a better balance of processor cores, memory, and I/O. Improve system flexibility with support for 4, 8, 10, or 16 GPUs and a broad selection of operating systems and options, all within a customized design that reduces costs, improves reliability, and provides leading serviceability.

What’s New

- NVIDIA H100 and AMD MI210 PCIe GPU support

- AMD Instinct™ MI100 with 2nd Gen Infinity Fabric™ Link

- Direct Liquid Cooling System fully integrated, installed, and supported by HPE. Support for PCIe Gen4 GPUs also provides extreme compute flexibility.

- Flexible support and options: InfiniBand, Ethernet, HPE Slingshot, Ubuntu, and enterprise operating systems such as Windows, VMware, SUSE, and Red Hat.

- Choice of HPE Pointnext for advisory, professional, and operational services, along with a flexible consumption model across the globe.

- Enterprise RAS with HPE iLO5, easy access modular design, and N+N power supplies.

- Save time and cost and improve user productivity with HPE iLO5

- World’s most secure industry standard server using HPE iLO5

Know your Server - CTO Configuration Support


HPE Apollo 6500 Gen10 PLUS Base Knowledge

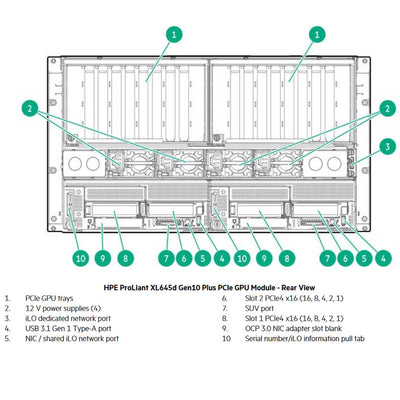

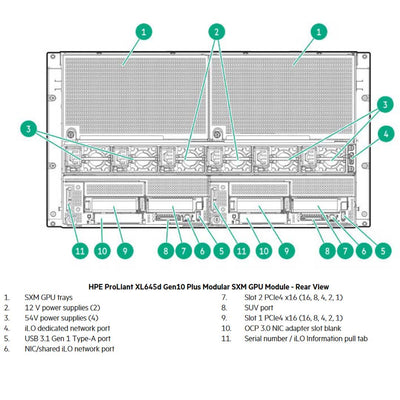

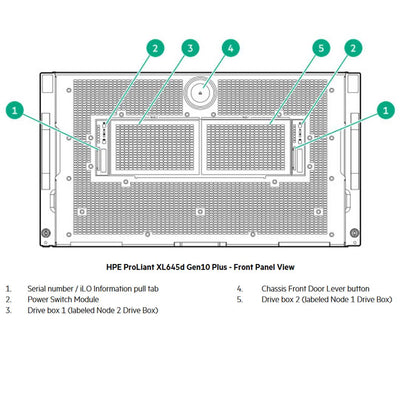

The HPE Apollo d6500 Gen10 Plus Chassis (P19674-B21) is a 4U chassis that is very powerful and somewhat different from the non-Plus version. Inside this chassis, you can install two different types of node. The XL645d (P19726-B21) is a 2U half-width node that holds 1 processor. The XL675d (P19725-B21) is also a 2U node, but it is full-width and holds 2 processors. Each node server uses one of two accelerator trays, which must be installed above the node servers in the 6500 Gen10 Plus chassis: the SXM4 Modular Accelerator Tray or the PCIe GPU Accelerator Tray. Each tray uses a different PSU setup and accepts a different style of GPU card. Each node type also has different CPU, Smart Array, cabling, and configuration options. Please keep track of which node type you have selected as you go through the CTO.

This CTO is split by the node server you choose. The two nodes are similar, but they differ in the number of GPUs the accelerator tray can hold and in the total number of DIMMs supported. Mixing RDIMM and LRDIMM memory is not supported.

XL645d Max Memory

- 16 total DIMMs; 8 DIMM slots per processor, 8 channels per processor, 1 DIMM per channel

- Maximum capacity (LRDIMM): 1.0 TB, up to 8 × 128 GB LRDIMMs @ 3200 MT/s

- Maximum capacity (RDIMM): 512 GB, up to 8 × 64 GB RDIMMs @ 3200 MT/s
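The capacity figures above are slots × DIMM size: one processor × 8 channels × 1 DIMM per channel gives 8 slots per XL645d node, so 8 × 128 GB = 1,024 GB (1.0 TB) with LRDIMMs and 8 × 64 GB = 512 GB with RDIMMs. The Python sketch below is a hypothetical helper (`Dimm` and `node_memory_gb` are illustrative names, not an HPE tool) that totals one node's DIMM population and enforces the two rules stated above: no more DIMMs than slots, and no mixing of RDIMM and LRDIMM.

```python
from __future__ import annotations

from dataclasses import dataclass

CHANNELS_PER_PROCESSOR = 8
DIMMS_PER_CHANNEL = 1
XL645D_PROCESSORS = 1  # the XL645d node holds a single processor


@dataclass(frozen=True)
class Dimm:
    kind: str     # "RDIMM" or "LRDIMM"
    size_gb: int


def node_memory_gb(dimms: list[Dimm], processors: int = XL645D_PROCESSORS) -> int:
    """Return total memory in GB for one node, enforcing the population rules."""
    slots = processors * CHANNELS_PER_PROCESSOR * DIMMS_PER_CHANNEL
    if len(dimms) > slots:
        raise ValueError(f"only {slots} DIMM slots are available")
    if len({d.kind for d in dimms}) > 1:
        raise ValueError("mixing RDIMM and LRDIMM is not supported")
    return sum(d.size_gb for d in dimms)


print(node_memory_gb([Dimm("LRDIMM", 128)] * 8))  # 1024
print(node_memory_gb([Dimm("RDIMM", 64)] * 8))    # 512
```

Passing a mixed list, or a ninth DIMM on a single-processor node, raises `ValueError` instead of returning a capacity.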

HPE Apollo 6500 Gen10 Plus Processors

Mixing two different processor models is NOT allowed.

HPE Apollo 6500 Gen10 PLUS Maximum Internal Storage

| Drive Type | XL675d Capacity | XL645d Capacity |
|---|---|---|
| Hot Plug SFF SATA HDD | 16 x 2 TB = 32 TB | 8 x 2 TB = 16 TB |
| Hot Plug SFF SAS HDD | 16 x 2 TB = 32 TB | 8 x 2 TB = 16 TB |
| Hot Plug SFF NVMe PCIe SSD | 16 x 15.36 TB = 245.76 TB | 16 x 15.36 TB = 245.76 TB |
| Hot Plug SFF SATA SSD | 16 x 7.68 TB = 122.88 TB | 8 x 7.68 TB = 61.44 TB |
| Hot Plug SFF SAS SSD | 16 x 15.3 TB = 244.8 TB | 8 x 15.3 TB = 122.4 TB |

One thing to note: the NVMe enablement kit can be installed only once per node server. So if you are using the XL645d, you can install two kits (one per node) for a total of 12 NVMe drives.

HPE storage controllers differ between these node servers. The half-width XL645d node does not support Flexible Smart Array cards, but the XL675d does. Both support the optional M.2 boot device, although each uses a different card to achieve the same outcome. Below is a list of the cable kits required for each drive configuration.

HPE ProLiant XL645d Storage Controller Cable Kits

| HPE Storage Configuration (Per Node) | Cable Kit | Enablement Card | Backplane | M.2 Cable Kit | M.2 Enablement Card |
|---|---|---|---|---|---|
| 8 Embedded SATA | P31487-B21 | | P25877-B21 | | |
| 8 SAS/SATA (Smart Array) | P31488-B21 | HPE Smart Array | P25877-B21 | | |
| 2 Embedded SATA + 2 x4 NVMe | P31483-B21 | | P25879-B21 | | |
| 2 Embedded SATA + 6 x4 NVMe | P31482-B21 | | P25879-B21 | | |
| 2 SAS/SATA (Smart Array) + 2 x4 NVMe | P31486-B21 | HPE Smart Array | P25879-B21 | | |
| No SFF Drives + NS204i-t M.2 Boot Device | | | | P31481-B21 | P20292-B21 |
| 8 Embedded SATA + NS204i-t M.2 Boot Device | P31487-B21 | | P25877-B21 | P31481-B21 | P20292-B21 |
| 8 SAS/SATA (Smart Array) + NS204i-t M.2 Boot Device | P31488-B21 | HPE Smart Array | P25877-B21 | P31481-B21 | P20292-B21 |
| 2 SAS/SATA (Smart Array) + 4 x4 NVMe | P31484-B21 | HPE Smart Array | P25879-B21 | | |
| 8 x4 NVMe Gen4 | P25883-B21 | | P25879-B21 | | |
| 6 x4 NVMe + NS204i-t M.2 Boot Device | | | P25879-B21 | P48120-B21 | P20292-B21 |
| 8 SAS/SATA (Smart Array) + 6 x4 NVMe + NS204i-t M.2 Boot Device | P31488-B21 | HPE Smart Array | P25879-B21 | P48120-B21 | P20292-B21 |
| 2 x4 NVMe + NS204i-t M.2 Boot Device | | | P25879-B21 | P59752-B21 | P20292-B21 |
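Each row of the XL645d cable kit table is a fixed list of part numbers per node, so a chassis bill of materials can be totaled mechanically. The Python sketch below is hypothetical (`XL645D_STORAGE_PARTS` and `storage_bom` are illustrative names): it copies only a few rows of the table, omits blank cells and the "HPE Smart Array" entry (which has no part number in the table), and assumes at most two XL645d nodes per chassis, matching the two-node GPU total given for this server.

```python
from __future__ import annotations

from collections import Counter

# A few rows copied from the XL645d storage table (blank cells omitted).
# The "HPE Smart Array" controller has no part number in the table, so it is not listed.
XL645D_STORAGE_PARTS: dict[str, list[str]] = {
    "8 Embedded SATA": ["P31487-B21", "P25877-B21"],
    "8 SAS/SATA (Smart Array)": ["P31488-B21", "P25877-B21"],
    "8 x4 NVMe Gen4": ["P25883-B21", "P25879-B21"],
    "No SFF Drives + NS204i-t M.2 Boot Device": ["P31481-B21", "P20292-B21"],
    "2 x4 NVMe + NS204i-t M.2 Boot Device": ["P25879-B21", "P59752-B21", "P20292-B21"],
}
MAX_XL645D_NODES = 2  # assumption: two half-width XL645d nodes per chassis


def storage_bom(node_configs: list[str]) -> Counter[str]:
    """Hypothetical helper: total part quantities, one configuration string per node."""
    if len(node_configs) > MAX_XL645D_NODES:
        raise ValueError("a chassis holds at most two XL645d nodes")
    bom: Counter[str] = Counter()
    for config in node_configs:
        # Raises KeyError for configurations not copied into the dictionary above.
        bom.update(XL645D_STORAGE_PARTS[config])
    return bom


print(dict(storage_bom(["8 Embedded SATA", "8 x4 NVMe Gen4"])))
```

A dictionary keeps the table as the single source of truth; adding a row to the sketch is just adding one more key.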

HPE Apollo 6500 Gen10 Plus XL645d Cable Kits:

- HPE XL645d Gen10 Plus 2SFF Smart Array SR100i SATA and 2SFF CPU Connected x4 NVMe Cable Kit (P31483-B21)

- HPE XL645d Gen10 Plus 2SFF Smart Array SAS and 2SFF CPU Connected x4 NVMe Cable Kit (P31486-B21)

- HPE XL645d Gen10 Plus 8SFF Embedded SATA Controller Cable Kit (P31487-B21)

- HPE XL645d Gen10 Plus 8SFF Smart Array SAS Cable Kit (P31488-B21)

- HPE XL645d Gen10 Plus M.2 Cable Kit (P31481-B21)

- HPE XL645d Gen10 Plus 8SFF Embedded SATA Controller x4 NVMe Cable Kit (P25883-B21)

- HPE XL645d Gen10 Plus 2SFF Smart Array SAS/SATA and 4SFF CPU Connected x4 NVMe Cable Kit (P31484-B21)

- HPE XL645d Gen10 Plus 2SFF Smart Array SATA and 6 Switch Connected x4 NVMe Cable Kit (P31482-B21)

- HPE Apollo 6500 Gen10 Plus M.2 2 x 4NVMe Cable Kit (P59752-B21)

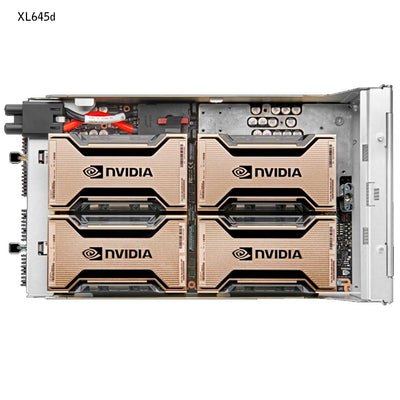

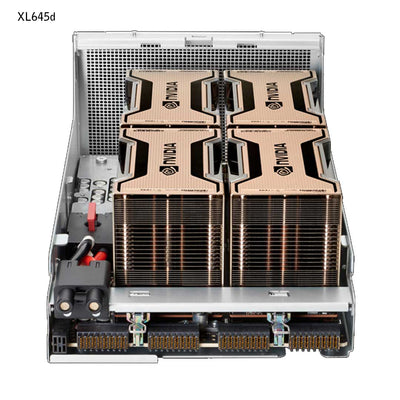

The XL645d supports up to 4 GPUs per node server, for a total of 8 when two nodes are installed.

HPE NVIDIA GPU

This server is all about the GPU configuration and its ability to support SXM4 or PCIe GPUs. Please note which GPU you have selected and your desired configuration when choosing the GPU accessories in this CTO.

Mixing of GPUs is not allowed.

XL645d PCIe GPU Rules

For each PCIe GPU, you must also order the PCIe Accelerator and Bracket v2 Cable Kit (P27282-B21).

If you install the MI100 GPU, you need (1) Fabric 4-way Bridge for HPE (R9B39A) for every 4 GPUs.

If you choose the NVIDIA H100 GPU, you need (1) Power Cable Kit (P60567-B21) for every H100 GPU.

For every pair of NVIDIA PCIe GPUs, you must select (3) Ampere 2-way 2-slot Bridges for HPE (R6V66A).
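The PCIe GPU rules above are per-GPU arithmetic, so the accessory quantities for a node can be computed rather than counted by hand. The Python sketch below is a hypothetical helper (`xl645d_pcie_accessories` is not an HPE tool) that returns part-number quantities for a single XL645d node. It assumes the model names `A100`, `H100`, `MI100`, and `MI210`, applies the R6V66A bridge rule to every NVIDIA PCIe model exactly as the rule is written, and counts an R9B39A bridge only for each complete group of four MI100 GPUs.

```python
from __future__ import annotations

# Part numbers from the XL645d PCIe GPU rules above.
CABLE_KIT = "P27282-B21"      # PCIe Accelerator and Bracket v2 Cable Kit: 1 per PCIe GPU
MI100_BRIDGE = "R9B39A"       # Fabric 4-way Bridge for HPE: 1 per 4 MI100 GPUs
H100_POWER = "P60567-B21"     # Power Cable Kit: 1 per H100 GPU
NVIDIA_BRIDGE = "R6V66A"      # Ampere 2-way 2-slot Bridge for HPE: 3 per pair of NVIDIA GPUs

# Assumption: model names used as keys in this sketch.
NVIDIA_MODELS = {"A100", "H100"}
AMD_MODELS = {"MI100", "MI210"}
MAX_GPUS_PER_NODE = 4  # XL645d limit per node


def xl645d_pcie_accessories(model: str, gpu_count: int) -> dict[str, int]:
    """Hypothetical helper: accessory quantities for one XL645d node.

    Accepting a single model enforces the no-mixing rule.
    """
    if model not in NVIDIA_MODELS | AMD_MODELS:
        raise ValueError(f"unknown GPU model: {model}")
    if not 1 <= gpu_count <= MAX_GPUS_PER_NODE:
        raise ValueError(f"the XL645d supports 1 to {MAX_GPUS_PER_NODE} GPUs per node")

    parts = {CABLE_KIT: gpu_count}
    if model == "MI100" and gpu_count >= 4:
        # Assumption: bridges are counted only for complete groups of four.
        parts[MI100_BRIDGE] = gpu_count // 4
    if model == "H100":
        parts[H100_POWER] = gpu_count
    if model in NVIDIA_MODELS and gpu_count >= 2:
        # Rule applied as written: three bridges for every pair of NVIDIA PCIe GPUs.
        parts[NVIDIA_BRIDGE] = 3 * (gpu_count // 2)
    return parts


print(xl645d_pcie_accessories("A100", 4))  # {'P27282-B21': 4, 'R6V66A': 6}
```

Requesting five GPUs, or an unlisted model, raises `ValueError`, which mirrors the 4-GPU-per-node limit stated for the XL645d.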

XL645d Modular GPU Rules

Select (1) Modular Accelerator Power Cable Kit (P31489-B21) per Accelerator Tray.

You can also choose air-cooled or liquid-cooled SXM4 GPUs.

HPE Apollo 6500 Gen10 PLUS Cooling

The chassis has 15 hot-pluggable 80 mm dual-rotor fans. The system also supports air- or liquid-cooled components such as the GPUs and CPUs. The fans are not redundant, and all of them come standard in the 6500 chassis.

HPE Apollo 6500 Gen10 PLUS Flex Slot your power!

Because you can choose either a Modular or a PCIe accelerator tray, and each tray needs a different PSU setup for its power usage, the power options depend on your GPUs. For air-cooled A100 Modular trays, you have two power tray options; the same applies if you have installed MI100 or A100 GPUs. For all other GPU configurations, you can choose any of the three single-PSU options.

HPE Apollo 6500 Gen10 Plus Lights Out! iLO

HPE iLO 5 ASIC. The rear of the chassis has four 1 Gb RJ-45 ports.

HPE iLO with Intelligent Provisioning (standard), with optional iLO Advanced and HPE OneView

HPE iLO Advanced licenses offer smart remote functionality without compromise, for all HPE ProLiant servers. The license includes the full integrated remote console, virtual keyboard, video, and mouse (KVM), multi-user collaboration, console record and replay, and GUI-based and scripted virtual media and virtual folders. You can also activate the enhanced security and power management functionality.